Hello,

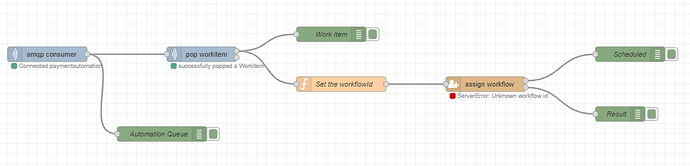

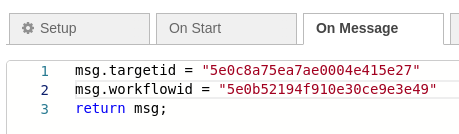

I am trying to assign/start a workflow dynamically through NodeRED using the assign node.

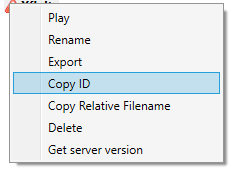

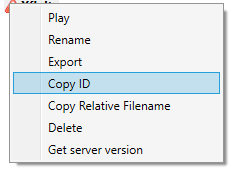

I copied the workflowid from OpenRPA:

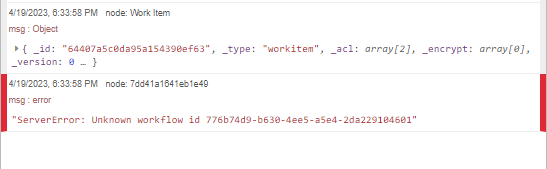

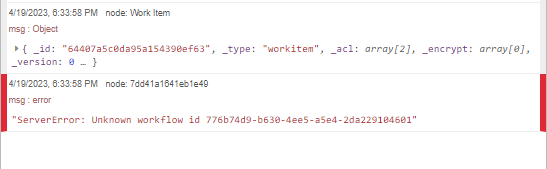

But when I add it to the msg in NodeRED, I get this error:

Why am I getting this error? I have double-checked the workflowid and it is correct.

Thank you all!

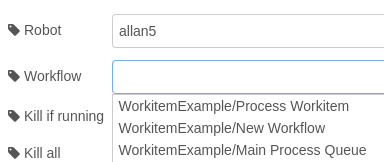

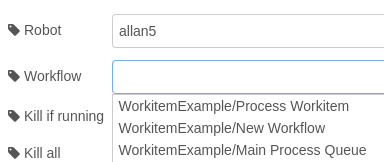

You need to use the RPA node.

Then double-click the node, use autocomplete to select a robot, and then use autocomplete to select a workflow.

Hi Allan,

That’s what I am trying to avoid, because I will have more than 500 different OpenRPA workflows (increasing over time), and I was thinking about doing it dynamically to avoid adding 500 robot nodes in NodeRED.

Is there a different way to do it, or is this the recommended way?

I have been reading and implementing Work Item Queues and it is fantastic, thank you so much for that awesome tool!

I am not sure, but maybe this could be a new feature:

Have an output Work Item Queue, and create a new NodeRED node like “Dynamic Robot” to assign the workflow dynamically and associate the workflow output (completed, status, failed) to the output Work Item Queue to be consumed by an amqp consumer node.

Regards and thanks so much for your support!

Hey, you helped me find a small UI bug, but let me explain first.

If you select the node and click the help tab,

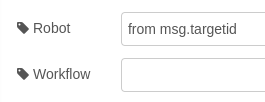

you can see that the node can also receive information from the msg object:

`msg.workflowid` and `msg.targetid`

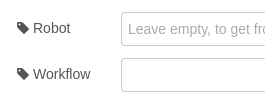

If you drag in a node, you can either leave the robot unselected or select “from msg.targetid”.

Then I see I forgot to add “from msg.workflowid” to the list of workflows. I will get that added tomorrow, but for now, just drag in a fresh RPA node and don’t select anything.

Then you can set it all using the two msg properties:

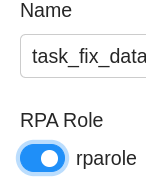
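A minimal sketch of a function node placed in front of an unconfigured RPA node that fills in the two properties. Only `msg.workflowid` and `msg.targetid` come from the node's help text; the `workflowIds` lookup table, the `msg.payload.process` field and the placeholder IDs are hypothetical. It is written in TypeScript for clarity; inside a real Node-RED function node you would drop the types, run the body directly on `msg` and end with `return msg;`.

```typescript
// Shape of the incoming message (only the fields this sketch uses).
interface RobotMessage {
  payload: { process: string; [key: string]: unknown };
  workflowid?: string;
  targetid?: string;
}

// Hypothetical lookup table: process name -> OpenRPA workflow ID.
// Replace the placeholder values with IDs copied from OpenRPA.
const workflowIds: Record<string, string> = {
  "invoice-entry": "<workflow id copied from OpenRPA>",
  "vendor-update": "<another workflow id>",
};

// Hypothetical default target: a robot user, or a role with "rpa" enabled.
const defaultTargetId = "<robot user or role id>";

// Set msg.workflowid and msg.targetid so an RPA node with nothing
// selected can pick both values up from the message.
function prepareRobotMessage(msg: RobotMessage): RobotMessage {
  const id = workflowIds[msg.payload.process];
  if (id === undefined) {
    throw new Error(`No workflow ID registered for "${msg.payload.process}"`);
  }
  msg.workflowid = id;
  // Keep a target that an earlier node already set; otherwise use the default.
  msg.targetid = msg.targetid ?? defaultTargetId;
  return msg;
}
```

With hundreds of workflows, the lookup could just as well be loaded from a collection instead of being hard-coded; the RPA node itself stays unconfigured either way.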

You can also set “targetid” to a role instead. For this to work, the robot user needs to be added to the role, and the role needs to have “rpa” enabled (you most likely need to log out and back in on the robots for this to take effect).

But if we are talking about many robots and/or workflow calls, I would HIGHLY recommend that you don’t use the RPA node and use workitem queues instead.

Oh, I just reread your post; I completely misunderstood.

Yes, I will create a new reply with a suggestion about that tomorrow.

Also, I kind of like the idea of having a queue override like we do with failure/success queues. Worth looking into too.

Hi Allan, I am glad I can help this community a little bit.

Yes, as you recommend, I definitely need to use some extra work item queues and research them more. I was trying to make the process/workflow less tedious on the OpenRPA side.

Have you reviewed my recommendation?

Have an output Work Item Queue, and create a new NodeRED node like “Dynamic Robot” to assign the workflow dynamically and push the workflow output (completed, status, failed) to the output Work Item Queue, to be consumed by an amqp consumer node.

I think it would help decouple things and reduce the complexity on the OpenRPA side, because any output would be pushed automatically to a Work Item Queue. It would work like an RPA (robot) node, but instead of having 3 outputs, it would push the output to a queue.

Regards and have a good night!

I will be waiting for your recommendation on the best way to address my requirement (more than 500 workflows, increasing over time).

I am looking to keep the whole/main logic in NodeRED/OpenFlow and only use OpenRPA to receive the data as arguments and implement the UI automation, without having to communicate directly (using work item activities) with any queue.

Thanks.

I will not create a “Dynamic Robot” node. But you can make the processing workflow dynamic: edit the “Main Process Queue” workflow (assuming you used my workitem example at examples-files/workitems-template at master · open-rpa/examples-files · GitHub) and change the Invoke OpenRPA activity to execute a workflow that you define in the workitem payload.
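The dispatch idea above can be sketched conceptually in TypeScript (it is not an OpenRPA workflow): the main process reads a workflow name from the workitem payload and runs the matching workflow with the payload's arguments. The `payload.workflow` and `payload.arguments` field names, the `workflows` registry and the example workflows are hypothetical stand-ins for the Invoke OpenRPA activity and the workflows installed on the robot, not OpenRPA APIs.

```typescript
// Arguments passed into a workflow and values returned from it.
type Args = Record<string, unknown>;
type Workflow = (args: Args) => Args;

// Minimal workitem shape for this sketch (hypothetical field names).
interface WorkItem {
  payload: { workflow: string; arguments: Args };
}

// Hypothetical registry standing in for the workflows available to the robot.
const workflows: Record<string, Workflow> = {
  "invoice-entry": (args) => ({ entered: args.invoiceNo }),
  "vendor-update": (args) => ({ updated: args.vendorId }),
};

// Look up the workflow named in the payload and run it with the payload's
// arguments, the way a dynamic "Invoke OpenRPA" step would.
function dispatch(item: WorkItem): Args {
  const wf = workflows[item.payload.workflow];
  if (!wf) {
    // An unknown name should fail the workitem rather than be silently skipped.
    throw new Error(`Unknown workflow: ${item.payload.workflow}`);
  }
  return wf(item.payload.arguments);
}
```

Adding a new workflow then means registering it once on the robot side, while NodeRED only decides which name and arguments go into the payload.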

On workitem queues, you can set an “on success” and an “on failure” workitem queue that the workitem will be copied to. So this has already been made. You can override this per workitem by setting it on the individual workitem right before updating it. The idea is that you create a queue for each step, so you can easily report on where you are and how many items are in each state. Moreover, you can easily retry things when something goes wrong (or add queues for handling errors, like adding a human in the loop).
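The routing described above can be modeled in memory to make it concrete: each queue has default success/failure queues, and an individual workitem may override them before it is updated. This is a conceptual TypeScript model, not OpenFlow's API; `QueueSet`, `complete`, the queue names and the field names are hypothetical, and copying only the id and payload to the next queue (so an override applies to a single hop) is an assumption.

```typescript
type Outcome = "successful" | "failed";

interface WorkItem {
  id: string;
  payload: Record<string, unknown>;
  // Optional per-item overrides; when unset, the queue defaults apply.
  successQueue?: string;
  failureQueue?: string;
}

interface QueueConfig {
  successQueue?: string;
  failureQueue?: string;
}

// In-memory stand-in for a set of named workitem queues.
class QueueSet {
  private queues = new Map<string, WorkItem[]>();
  private config: Record<string, QueueConfig>;

  constructor(config: Record<string, QueueConfig>) {
    this.config = config;
  }

  push(queue: string, item: WorkItem): void {
    const items = this.queues.get(queue) ?? [];
    items.push(item);
    this.queues.set(queue, items);
  }

  pop(queue: string): WorkItem | undefined {
    return this.queues.get(queue)?.shift();
  }

  // Number of items waiting in a queue: the per-step report.
  count(queue: string): number {
    return this.queues.get(queue)?.length ?? 0;
  }

  // Finish an item and copy it to the next queue. A per-item override
  // wins over the queue-level default. Returns the target queue, if any.
  complete(queue: string, item: WorkItem, outcome: Outcome): string | undefined {
    const defaults: QueueConfig = this.config[queue] ?? {};
    const target =
      outcome === "successful"
        ? item.successQueue ?? defaults.successQueue
        : item.failureQueue ?? defaults.failureQueue;
    if (target !== undefined) {
      this.push(target, { id: item.id, payload: item.payload });
    }
    return target;
  }
}
```

For example, with `extract` configured to send successes to `enter` and failures to `review`, an item that sets `failureQueue: "human-in-the-loop"` lands there instead when it fails, and `count` shows how many items sit at each step.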

The RPA node in NodeRED sends a message directly to a robot (or a group of robots).

The RPA node has NO state. It has no concept of a workflow or its status; it simply shows a color depending on the status messages it gets from the robot.

This node is handy if you need to run a quick workflow, and you can easily send and receive data using arguments. But it is not transactionally safe, and you WILL lose messages if something goes wrong: RabbitMQ goes down, OpenRPA crashes before sending success/failure, NodeRED restarts between start and end, etc.

With workitems, you send a message to OpenFlow (add/push workitem), and OpenFlow then notifies the robot or group of robots to run something. They pull information about what to do (pop workitem), process it, and then send the result back to OpenFlow (success/failure, data) using Update Workitem. To keep it safe, you must wrap the workitem processing separately from the workflow that updates the workitem (see my example above).
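The push, pop, process and update cycle above can be sketched in TypeScript to show why the processing is wrapped: it runs inside a try/catch, and the update happens outside it, so a failing business workflow still reports back as failed instead of leaving the item stuck. `pushWorkitem`, `popWorkitem`, `updateWorkitem` and `processItem` are hypothetical stand-ins for the workitem activities and the business workflow, and the state names are only modeled loosely on OpenFlow's; none of this is the actual OpenRPA or OpenFlow API.

```typescript
interface WorkItem {
  id: string;
  payload: Record<string, unknown>;
  state: "new" | "processing" | "successful" | "failed";
  errormessage?: string;
}

// Hypothetical in-memory queue standing in for an OpenFlow workitem queue.
const queue: WorkItem[] = [];
const done: WorkItem[] = [];

function pushWorkitem(id: string, payload: Record<string, unknown>): void {
  queue.push({ id, payload, state: "new" });
}

function popWorkitem(): WorkItem | undefined {
  const item = queue.shift();
  if (item) item.state = "processing";
  return item;
}

// Stand-in for Update Workitem: records the final state and data.
function updateWorkitem(item: WorkItem): void {
  done.push(item);
}

// Business logic (the UI automation). Fails on purpose when the
// payload has no "customer" field.
function processItem(item: WorkItem): void {
  if (typeof item.payload.customer !== "string") {
    throw new Error("payload.customer is missing");
  }
  item.payload.result = `handled ${item.payload.customer}`;
}

// The wrapper: processing errors are caught and recorded, and the
// update is always sent, whatever happened during processing.
function runOnce(): boolean {
  const item = popWorkitem();
  if (!item) return false;
  try {
    processItem(item);
    item.state = "successful";
  } catch (err) {
    item.state = "failed";
    item.errormessage = err instanceof Error ? err.message : String(err);
  }
  updateWorkitem(item);
  return true;
}
```

Calling `runOnce()` in a loop until it returns `false` drains the queue; every item ends up in `done` as either successful or failed, which is the state a success/failure queue would then pick up.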

If you then need to be notified inside NodeRED when the workitem completes, set a success/failure workitem queue, and you will be notified by using an amqp consumer for that queue (each workitem queue is also a message queue you can consume using the amqp consumer node).

On one hand, it sounds like you should be using the RPA node (you want 500+ workflows and want to control everything from NodeRED), but if you really need 500+ workflows, it also sounds like you are trying to build something very complex. In that case, I would want much better control over the flow of data, the ability to report on the current state, and the option to easily “recover” after failures (requeueing).

If you want to keep everything in NodeRED, you would need to keep state. Since OpenFlow is backed by a database, that would be the most obvious place to save the state, but then you might as well use workitem queues, as they were designed exactly for that: to keep state on the process and notify each actor when they have work to do.


Hi Allan,

Thank you so much for taking the time to elaborate on your response/recommendation; I really appreciate it.

Yes, I will try your example and adapt it to the scenario I am trying to set up. I was trying to handle the Work Item Queue logic completely from NodeRED, but for now I will put that logic in a main Work Item process in the bot, as you recommend, so that it dynamically executes a workflow defined in the payload of the work item received from the queue.

Regards, and thank you very much!