This is a summary and change proposal based on findings related to:

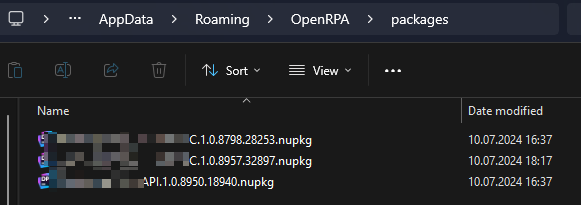

FR: Nuget feed in OpenFlow

(Bug?) Updating dependency leaves loaded dlls unchanged

I’ll try to lay out my train of thought, so it’s easier to see how I arrived at these conclusions.

Below is a short list of the main issues from a user/deployer perspective:

Issue 1:

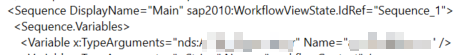

OpenRPA loads all DLLs from the extensions folder on application start.

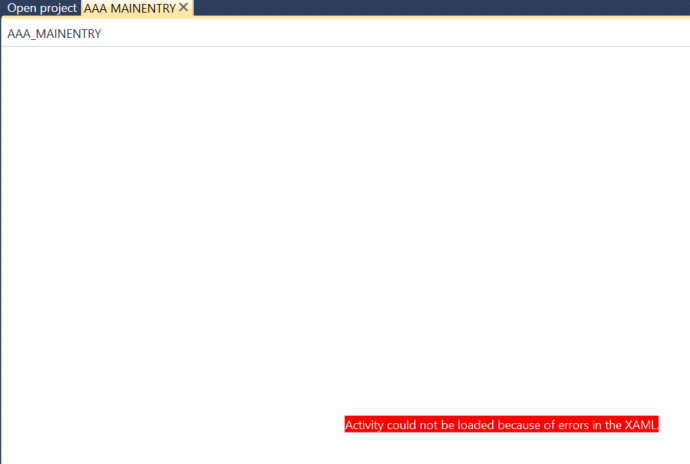

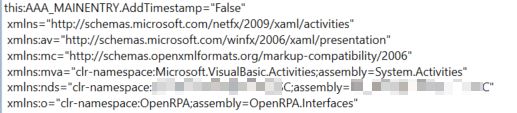

As a result, the extension DLLs are locked and cannot be updated: changing the dependency version in a project does not replace the actual DLL in the extensions folder, so the update does not do what it’s supposed to and the workflows end up invalid.

Note: this does not occur when the dependency was added during the same OpenRPA session, because the process can then still remove the file (it still has ownership of it).

Issue 2:

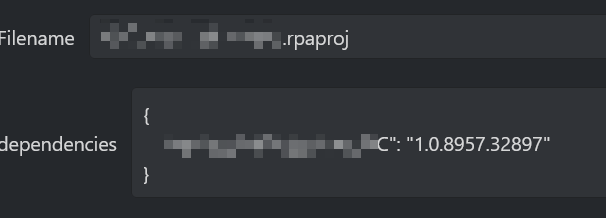

OpenRPA does not ensure that the dependencies of available projects are actually present and loaded when those dependencies change (it only does this when a project is imported manually).

This leads to missing dependencies when remotely deploying projects with package dependencies.

Issue 3:

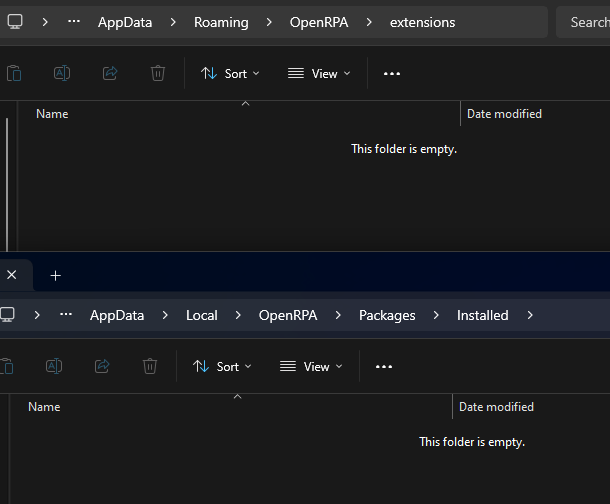

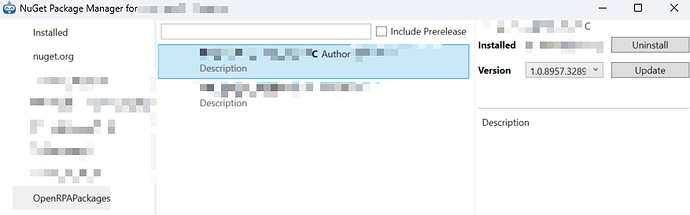

The OpenRPA Package Manager only allows changing a project’s dependencies, not installing them on the local machine, and it shows every dependency as Installed.

This means that if a package is added to a project as a dependency, the Package Manager shows it as Installed even though it’s completely missing from the machine. To actually install it, the user has to either install or update the package (and thus alter the project). There is no way to do the equivalent of a NuGet restore for available projects.

Issue synthesis:

In summary, the only ways to actually install an extension on your machine are to manually copy the correct DLLs into the extensions folder while OpenRPA is closed, or to “update” the project dependencies (which requires update rights on the project).

Solution approach:

Since reworking OpenRPA to be multi-process or multi-AppDomain is not worth it just for extensions (it’s a niche use case), and “external” solutions are at best band-aid hacks, the solution should most likely center around NuGetPackageManager and how (and when) the extensions are loaded.

Addition 1:

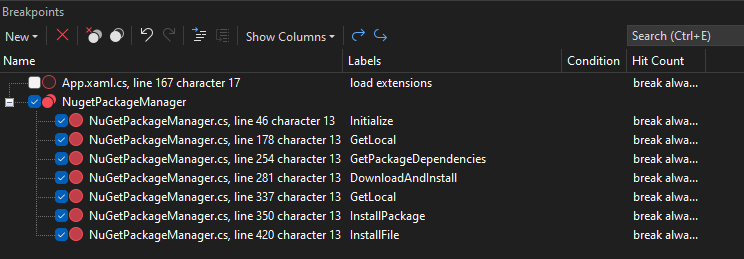

Add a NuGet restore equivalent

All of the functionality on the NuGetPackageManager side is already there; it should only require a new call path that installs the packages without altering the projects.

If I’m reading the code correctly, it could work something like this (a bit hacky, but ehh):

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using NuGet.Packaging.Core;
using NuGet.Versioning;

// Member of the class that holds availableProjects.
// Installs the union of all project dependencies without altering any project.
// Must be async (Task<bool>) because DownloadAndInstall is awaited.
private async Task<bool> InstallAllProjectDependencies()
{
    // package id -> version range string, merged across all available projects
    var allDependencies = new Dictionary<string, string>();
    foreach (var project in availableProjects
        .Where(p => p.dependencies != null && p.dependencies.Count > 0))
    {
        Log.Debug($"Found dependencies on project: {project.name}");
        foreach (var newDependency in project.dependencies)
        {
            if (allDependencies.TryGetValue(newDependency.Key, out var existing))
            {
                if (existing != newDependency.Value)
                {
                    // two projects require different versions of the same package
                    string errMsg = $"Dependency clash for {newDependency.Key}: {existing} vs {newDependency.Value} (project {project.name}).";
                    Log.Error(errMsg);
                    return false;
                }
            }
            else
            {
                Log.Debug($"Added dependency to load: {newDependency.Key}: {newDependency.Value}");
                allDependencies[newDependency.Key] = newDependency.Value;
            }
        }
    }

    // yanked from Project.cs: install each merged dependency exactly once
    foreach (var jp in allDependencies)
    {
        var ver_range = VersionRange.Parse(jp.Value);
        // ranges without an inclusive minimum version are skipped
        if (ver_range.IsMinInclusive)
        {
            Log.Information("DownloadAndInstall " + jp.Key);
            var target_ver = NuGetVersion.Parse(ver_range.MinVersion.ToString());
            // can call with project = null as it's not used there? Otherwise create a dummy throwaway project before the loop
            await NuGetPackageManager.Instance.DownloadAndInstall(null, new PackageIdentity(jp.Key, target_ver), true);
        }
    }
    return true;
}
```

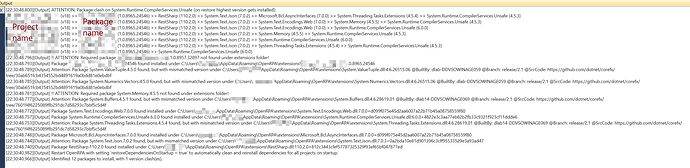

When to call it:

This could potentially be called instead of the current extension assembly loading in app.xaml, although I’m not sure whether the projects are available at that point.

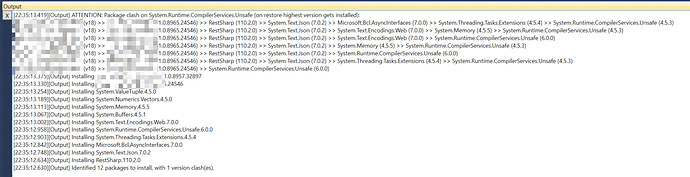

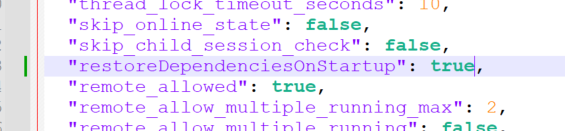

Alternatively, the LoadDlls parameter could be set to false and the method called before the extensions are loaded. That way it would do an auto-restore before loading the extensions (config flag, default false? → set to true for end users/robots?).
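To make the proposed ordering concrete, here is a minimal sketch of the startup sequence, assuming a config flag gates the restore. Everything in it is hypothetical: AutoRestoreExtensions, RestoreAllDependencies and LoadExtensions are illustrative stand-ins, not existing OpenRPA APIs, and the steps are only recorded rather than performing real NuGet calls. The point is that the restore (with LoadDlls = false) has to run before any extension DLL is loaded, since after that the files stay locked for the rest of the process.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical sketch of the proposed startup order; all names are illustrative.
public static class StartupSketch
{
    // Records which steps ran, in order, so the ordering can be checked.
    public static readonly List<string> Steps = new List<string>();

    // Stand-in for InstallAllProjectDependencies with LoadDlls = false:
    // packages would be written to the extensions folder but not loaded yet.
    static Task<bool> RestoreAllDependencies()
    {
        Steps.Add("restore");
        return Task.FromResult(true);
    }

    // Stand-in for the existing extension loading in app.xaml;
    // from this point on the DLLs are locked by the process.
    static void LoadExtensions()
    {
        Steps.Add("load");
    }

    public static async Task Startup(bool autoRestoreExtensions)
    {
        Steps.Clear();
        if (autoRestoreExtensions)
        {
            // Restore first, while nothing is locked yet.
            bool ok = await RestoreAllDependencies();
            // Assumption: on failure, keep starting with whatever is on disk.
            if (!ok) Steps.Add("restore failed");
        }
        LoadExtensions();
    }

    public static async Task Main()
    {
        await Startup(autoRestoreExtensions: true);
        Console.WriteLine(string.Join(" -> ", Steps)); // prints "restore -> load"
    }
}
```

With the flag off (the suggested default), only the load step runs, so current behavior is unchanged for developers.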

Aside from that, I think it would be good to expose a call like this (with true for LoadDlls) on user demand, either per project or for all projects (the code is very similar). That way, the user (dev) would have good control over what happens when.

Summary:

I think adding an auto-restore on startup would “fix” most of the extension version-management issues described above.

So… thoughts?

If this sounds reasonable, I’ll be happy to check whether it actually works as intended (at least the manual restore part; I’m less sure about the auto-restore on startup).

As in: I can add it and open a PR if it works, so you don’t have to worry about this for now. I just need an opinion on whether this is a change you’re open to before diving into code.

For now, something like this manual restore plus the PackageRestore workflow from the OpenFlow NuGet attempt should be a good workaround.